Hey,

While your competitors are hemorrhaging cash on closed-source APIs charging $0.08 per image, a silent revolution is happening. Five open-source models have just obliterated the cost barrier to enterprise-grade image generation.

Let me show you exactly what you're missing...

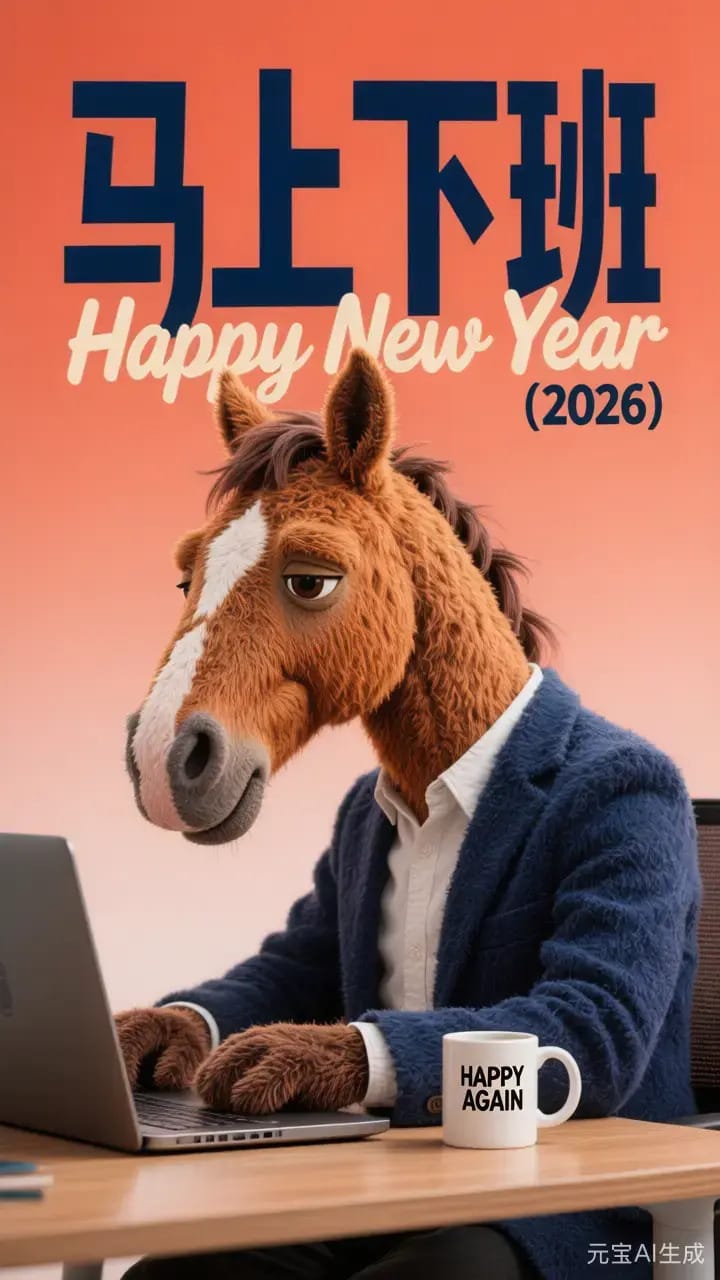

1. Z-Image Turbo

Generated using Z-Image Turbo

"Alibaba's Consumer GPU Champion: 6B Parameters, 8 Steps, Sub-Second Speed"

Many capable image models are powerful but hungry, requiring enterprise GPUs with 40-80GB VRAM.

Z-Image Turbo changes the game: High-quality photorealistic generation on 16GB consumer GPUs.

🔥 Verified Technical Specs

Parameters: 6 billion

Developer: Alibaba Cloud (Tongyi-MAI)

Architecture: Scalable Single-Stream DiT (S3-DiT)

Inference Steps: 8 maximum (configurable down to 1)

VRAM Requirement: 16GB (consumer hardware compatible)

Speed: Sub-second on H800 GPUs, ~2-4 seconds on RTX 4090

Training Method: Decoupled-DMD distillation + DMDR reinforcement learning

Special Features: Bilingual text rendering (English & Chinese)

Released: November 27, 2025

💎 What Makes It Absolutely Killer:

1. Runs on Consumer Hardware

This is the democratization moment. Z-Image Turbo fits comfortably within 16GB VRAM, meaning you can run it on:

✅ RTX 4090 (24GB): perfect performance

✅ RTX 4080 (16GB): works great

✅ RTX 3090 (24GB): older but capable

✅ Even some high-end laptops with RTX 4080/4090 mobile
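Want to sanity-check the 16GB claim yourself? Here is a back-of-the-envelope sketch. It assumes 2 bytes per parameter (bf16/fp16 weights) and a flat overhead allowance; the `overhead_gb` figure is a hypothetical placeholder, and the estimate ignores the text encoder, VAE, and activations, so treat it as a rough lower bound rather than a measurement.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: float = 2.0) -> float:
    """Approximate memory for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    # billions of parameters * bytes per parameter = gigabytes
    return params_billion * bytes_per_param


def fits(params_billion: float, vram_gb: float,
         bytes_per_param: float = 2.0, overhead_gb: float = 2.0) -> bool:
    """Rough check: weights plus a hypothetical overhead allowance must fit in VRAM."""
    return weight_memory_gb(params_billion, bytes_per_param) + overhead_gb <= vram_gb


# Z-Image Turbo: 6B parameters (from the spec list above) on a 16GB card
print(weight_memory_gb(6), "GB of weights; fits in 16GB:", fits(6, 16))
```

By the same arithmetic, an 80B-parameter model needs roughly 160GB for bf16 weights alone, which is why the largest models in this roundup call for multi-GPU setups.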

2. Photorealistic Quality at Lightning Speed

Don't mistake "consumer-friendly" for "low quality." Z-Image Turbo achieves:

✅ Sub-second inference on enterprise H800 GPUs

✅ 2-4 seconds on consumer RTX 4090

✅ Only 8 inference steps (vs. 20-50 for traditional models)

✅ Photorealistic skin texture and fine details
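To put the step counts in perspective, here is a minimal sketch of the arithmetic. It assumes every denoising step costs about the same and ignores fixed costs such as text encoding and VAE decoding, so real-world speedups will be somewhat smaller.

```python
def step_speedup(baseline_steps: int, distilled_steps: int) -> float:
    """Ratio of denoising steps, assuming each step takes the same time."""
    return baseline_steps / distilled_steps


# 8 steps versus the 20-50 steps quoted for traditional models
low = step_speedup(20, 8)
high = step_speedup(50, 8)
print(f"Roughly {low}x to {high}x fewer denoising steps")
```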

3. Bilingual Text Rendering Champion

Z-Image Turbo excels at accurately rendering complex Chinese and English text, a notoriously difficult task for image models.

4. State-of-the-Art Among Open-Source Models

According to Elo-based Human Preference Evaluation on Alibaba AI Arena, Z-Image Turbo shows "highly competitive performance against other leading models, while achieving state-of-the-art results among open-source models."
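If "Elo-based" sounds abstract, here is a minimal sketch of the classic Elo update applied to one blind comparison between two models. The K-factor of 32 and the starting ratings of 1500 are illustrative assumptions; the arena's actual parameters and any adjustments it makes are not stated in the source.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update(rating_a: float, rating_b: float, score_a: float, k: float = 32):
    """Return new ratings after one comparison.

    score_a is 1.0 if A's image was preferred, 0.0 if B's was, 0.5 for a tie.
    k is an assumed K-factor, not a value taken from any specific arena.
    """
    exp_a = expected_score(rating_a, rating_b)
    new_a = rating_a + k * (score_a - exp_a)
    new_b = rating_b + k * ((1.0 - score_a) - (1.0 - exp_a))
    return new_a, new_b


# Two equally rated models; a human voter prefers model A's image
print(update(1500, 1500, 1.0))
```

Repeat this over thousands of blind votes and the ratings settle into a leaderboard, which is the general idea behind preference-based rankings.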

2. HunyuanImage-3.0-Instruct

HunyuanImage-3.0: Complex Scene Understanding

"Tencent's Thinking Machine: The Only Model That Reasons About Images"

Here's something wild: What if an image generator could think before it creates?

HunyuanImage-3.0-Instruct is the world's first native multimodal model with built-in Chain-of-Thought (CoT) reasoning. It doesn't just generate images; it understands context, analyzes your intent, and reasons through complex creative tasks.

🔥 Verified Technical Specs

Parameters: 80 billion total (13 billion activated per token)

Architecture: Mixture-of-Experts (MoE) with 64 experts

Type: Native multimodal (unified understanding + generation)

Reasoning: Built-in Chain-of-Thought (CoT) capability

Developer: Tencent

License: Open-source

Released: January 26, 2026 (Instruct version)

Languages: Bilingual (English/Chinese)

💎 What Makes It Absolutely Killer:

1. Chain-of-Thought Reasoning (The Killer Feature)

This is unprecedented. Before generating an image, HunyuanImage-3.0-Instruct thinks.

What this means:

Analyzes your input image and prompt

Breaks down complex editing tasks into structured steps

Expands sparse prompts into comprehensive instructions

Considers subject, composition, lighting, color palette, and style

Then generates based on this reasoned understanding

2. The Largest Open-Source Image MoE Model

With 80 billion parameters and 64 experts, this is the largest open-source image generation Mixture of Experts model to date.
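How can an 80B model activate only 13B parameters per token? The general trick is sparse routing: a small router scores every expert and only the top few run. The toy sketch below shows top-k routing in plain Python. The value `TOP_K = 8` and the random router scores are hypothetical stand-ins for illustration, not details confirmed by the source, and this is not Tencent's implementation.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    ranked = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    # Renormalize the gate weights over the selected experts only
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


NUM_EXPERTS = 64  # from the spec list above
TOP_K = 8         # hypothetical: the source does not say how many experts fire

rng = random.Random(0)
logits = [rng.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in router scores
chosen = route_top_k(logits, TOP_K)

print(f"{len(chosen)} of {NUM_EXPERTS} experts run for this token")
print(f"Active share of parameters per token: {13 / 80:.0%}")
```

The payoff is that compute per token tracks the active parameters, while the full parameter count still has to sit in memory.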

3. Unified Multimodal Architecture

Unlike most models that bolt image understanding onto generation, HunyuanImage-3.0 unifies both in a single autoregressive framework. This means:

Better context understanding

More coherent image editing

Superior multi-image fusion

Stronger prompt adherence

4. Comprehensive Task Support

HunyuanImage-3.0-Instruct isn't just text-to-image. It handles:

Text-to-Image (T2I): Professional-grade generation from text

Text-and-Image-to-Image (TI2I): Creative editing, style transfer, element addition/removal

Multi-Image Fusion: Combine up to 3 reference images into coherent compositions

Prompt Self-Rewrite: Automatically enhance vague prompts into detailed descriptions

Image Understanding: Visual question answering and analysis

3. Qwen-Image-2512

"Alibaba's December Monster: Photorealism That Makes Your Jaw Drop"

Remember when AI-generated images had that telltale "plastic" look? That weird smoothness that screamed "fake"?

Qwen-Image-2512 just murdered that problem. Released December 31, 2025, this is Alibaba's gift to the open-source community, and it's currently the strongest open-source model on AI Arena.

🔥 Verified Technical Specs

Model Name: Qwen-Image-2512 (update of original Qwen-Image)

Developer: Alibaba Cloud (Qwen team)

Resolution: Supports up to 2048px on either axis (the "2512" in the name marks the December 2025 release, not a pixel count)

Architecture: Diffusion-based with enhanced realism pipeline

License: Open-source

Released: December 31, 2025

Bilingual: Optimized for both English and Chinese prompts

💎 What Makes It Absolutely Killer:

1. Human Realism That's Actually Human

The December update focused obsessively on one thing: making people look real. Not magazine-cover perfect, but real. With pores, natural skin texture, individual hair strands.

2. Ranked #1 on AI Arena (10,000+ Blind Tests)

After over 10,000 rounds of blind human evaluation on AI Arena, Qwen-Image-2512 ranks as the strongest open-source image model, staying competitive with closed-source alternatives.

3. Finer Natural Details

It's not just humans. Qwen-Image-2512 renders:

Individual strands of animal fur with realistic texture

Water flow and mist with photographic quality

Foliage with natural color gradations

Landscape details that rival professional photography

4. Improved Text Rendering

Already a strength of the original model, 2512 takes it further. It can generate complete PowerPoint slides with accurate Chinese characters, complex infographics, and professional educational posters.

4. FLUX.1 [schnell]

"The Speed Demon With True Commercial Freedom"

Let's cut through the noise: Most "open-source" models have license restrictions that kill commercial use. FLUX.1 [schnell] doesn't play that game.

Released under a true Apache 2.0 license, this is the model you can actually use for personal, scientific, AND commercial purposes without legal nightmares.

🔥 Verified Technical Specs

Parameters: 12 billion

Developer: Black Forest Labs

Architecture: Rectified flow transformer with latent adversarial diffusion distillation

Inference Speed: 1-4 steps (guidance_scale=0.0)

License: Apache 2.0 (fully open-source for commercial use)

Resolution: Flexible aspect ratios

Released: August 2024

💎 What Makes It Absolutely Killer:

1. True Apache 2.0 Freedom

This isn't some "non-commercial only" license buried in fine print. FLUX.1 [schnell] is released under the actual Apache 2.0 license, the same license used by Android, Kubernetes, and countless enterprise projects.

What this means for you:

✅ Use it in commercial products

✅ Modify and distribute the code

✅ No revenue caps or usage restrictions

✅ No requirement to share your modifications

✅ Lightweight attribution only: include the license text and keep existing copyright notices when you redistribute

⚡ Legal Clarity: The Apache 2.0 license is one of the most permissive and well-understood licenses in enterprise software. Your legal team won't lose sleep over this one.

2. Blazing Fast Inference

The "schnell" isn't just branding; it's German for "fast." And fast it is.

FLUX.1 [schnell] was trained using latent adversarial diffusion distillation, allowing it to generate high-quality images in just 1-4 steps. Compare that to traditional diffusion models requiring 20-50 steps.

Real-world speed:

On an A100 GPU: ~1-2 seconds per image (4 steps)

On a consumer RTX 4090: ~3-5 seconds per image

On older hardware (RTX 3090): ~8-12 seconds per image
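Those timings translate directly into cost. The sketch below converts seconds per image into throughput and cost per image. The `$2.00` hourly GPU rate is a hypothetical placeholder (plug in your own cloud or amortized hardware price), and the math assumes the GPU is busy 100% of the time, which real workloads rarely achieve.

```python
def images_per_hour(seconds_per_image: float) -> float:
    """Throughput for a single GPU generating images back to back."""
    return 3600 / seconds_per_image


def cost_per_image(gpu_hourly_usd: float, seconds_per_image: float) -> float:
    """GPU rental cost attributed to one image, assuming full utilization."""
    return gpu_hourly_usd * seconds_per_image / 3600


GPU_HOURLY_USD = 2.00   # hypothetical rental price, not from the source
API_PRICE_USD = 0.08    # closed-source API price quoted at the top of this email

# ~2 seconds per image: the slow end of the A100 range above
self_hosted = cost_per_image(GPU_HOURLY_USD, 2)
print(f"{images_per_hour(2):.0f} images/hour at ${self_hosted:.4f} each "
      f"vs ${API_PRICE_USD:.2f} via API")
```

Engineering time, idle capacity, and storage are not included, so the gap in practice will be narrower than this raw number suggests.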

3. Quality That Competes With Closed-Source

Despite the speed, FLUX.1 [schnell] delivers "cutting-edge output quality and competitive prompt following, matching the performance of closed source alternatives" according to the official model card.

4. Production-Ready Ecosystem

FLUX.1 [schnell] isn't some research project; it's battle-tested in production:

Native integration with Hugging Face Diffusers

ComfyUI support for node-based workflows

API endpoints from multiple providers (fal.ai, replicate.com, mystic.ai)

Active community and extensive documentation
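For the Diffusers route, a minimal sketch might look like the following. It uses the 4-step, `guidance_scale=0.0` settings from the spec list above; the function name `generate`, the output path, and the seed are illustrative choices. It assumes `torch`, `diffusers`, and `accelerate` are installed and that you have enough GPU memory plus the bandwidth to download the weights on first run.

```python
SCHNELL_MODEL_ID = "black-forest-labs/FLUX.1-schnell"

# Settings matching the spec list: few steps, no classifier-free guidance
SCHNELL_SETTINGS = {
    "num_inference_steps": 4,
    "guidance_scale": 0.0,
    "max_sequence_length": 256,
}


def generate(prompt: str, out_path: str = "flux-schnell.png", seed: int = 0) -> str:
    """Generate one image with FLUX.1 [schnell] and save it to out_path."""
    # Imported lazily so this file can be loaded without the heavy dependencies
    import torch
    from diffusers import FluxPipeline

    pipe = FluxPipeline.from_pretrained(SCHNELL_MODEL_ID, torch_dtype=torch.bfloat16)
    # Moves sub-models to the GPU only while they are needed, trading speed for VRAM
    pipe.enable_model_cpu_offload()

    image = pipe(
        prompt,
        generator=torch.Generator("cpu").manual_seed(seed),
        **SCHNELL_SETTINGS,
    ).images[0]
    image.save(out_path)
    return out_path


# Example call (downloads the model weights on first use):
# generate("a red fox in fresh snow, golden hour")
```

Reloading the pipeline on every call is wasteful; in a real service you would load it once and reuse it across requests.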

5. GLM-Image

"The Knowledge Beast That Renders Complex Information Like Magic"

Here's the truth nobody's telling you: Most AI image generators fail spectacularly when you ask them to create something with actual knowledge: technical diagrams, educational posters, infographics with precise data.

GLM-Image is different. It's the first open-source, industrial-grade discrete auto-regressive model that actually understands what you're asking for.

🔥 Verified Technical Specs

Parameters: 16 billion (9B auto-regressive + 7B diffusion decoder)

Architecture: Hybrid auto-regressive + diffusion decoder

Based on: GLM-4-9B-0414 language model

Resolution: 1024px to 2048px native output

License: Open-source (check repository for specific terms)

Released: January 14, 2026

💎 What Makes It Absolutely Killer:

1. Text Rendering That Actually Works

On the CVTG-2k benchmark, GLM-Image achieved a Normalized Edit Distance (NED) of 0.9557 and 91.16% average word accuracy, beating every other open-source model including Qwen-Image-2512 (0.929 NED) and Z-Image (0.9367 NED).
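What do these two metrics actually measure? The sketch below shows one common way to compute them: a similarity-style NED (1 minus Levenshtein distance divided by the longer string's length, so higher is better) and a position-by-position word accuracy. The benchmark's exact normalization and word-matching rules may differ, so read this as an illustration of the idea, not the official scoring code.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def ned_similarity(pred: str, target: str) -> float:
    """1.0 means the rendered text matches the target exactly."""
    longest = max(len(pred), len(target))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(pred, target) / longest


def word_accuracy(pred_words, target_words) -> float:
    """Share of target words reproduced exactly, compared position by position."""
    if not target_words:
        return 1.0
    hits = sum(p == t for p, t in zip(pred_words, target_words))
    return hits / len(target_words)


# A sign that should read "OPEN" but was rendered as "OPFN"
print(ned_similarity("OPFN", "OPEN"))
print(word_accuracy(["GRAND", "OPENlNG"], ["GRAND", "OPENING"]))
```

A single wrong character barely dents NED but zeroes out that word's accuracy, which is why the two numbers are reported together.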

2. Knowledge-Dense Generation

Need to generate a technical infographic explaining industrial processes? A slide deck with complex data visualization? GLM-Image excels where diffusion models fail, by actually understanding semantic relationships.

3. Beyond Text-to-Image

GLM-Image supports:

Image editing with natural language instructions

Style transfer while preserving content

Identity-preserving generation (same face, different contexts)

Multi-subject consistency across generations

⚖️ Head-to-Head Comparison

Which model is right for your use case?

| Feature | GLM-Image | Qwen-2512 | FLUX.1 | Hunyuan-3.0 | Z-Image Turbo |
|---|---|---|---|---|---|
| Parameters | 16B | Not disclosed | 12B | 80B (13B active) | 6B |
| VRAM Required | ~32GB | ~13GB | ~16GB | ≥640GB (8×80GB) | 16GB |
| License | Open-source | Open-source | Apache 2.0 | Open-source | Open-source |
| Best For | Knowledge-dense tasks | Photorealism | Speed + commercial use | Complex editing | Consumer hardware |
| Inference Steps | 50 | 50 | 1-4 | 50 | 8 |
| Text Rendering | ⭐⭐⭐ | ⭐⭐⭐⭐⭐ | ⭐⭐ | ⭐⭐⭐⭐ | ⭐⭐⭐⭐ |
| Image Editing | ✅ | ✅ | ❌ | ✅ (Advanced) | ✅ |

💡 Decision Framework:

Tight budget, need production-ready: Z-Image Turbo

Commercial product, need legal clarity: FLUX.1 [schnell]

Best photorealism, willing to invest: Qwen-Image-2512

Knowledge-intensive content (infographics, education): GLM-Image

Complex editing, enterprise application: HunyuanImage-3.0-Instruct
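If you want to wire this framework into a tool or an internal wiki bot, it boils down to a lookup table. A minimal sketch follows; the priority keys such as `tight_budget` are hypothetical labels invented for this example, while the recommendations are the ones listed above.

```python
# Priority labels are illustrative; model names come from the decision framework
RECOMMENDATIONS = {
    "tight_budget": "Z-Image Turbo",
    "commercial_legal_clarity": "FLUX.1 [schnell]",
    "photorealism": "Qwen-Image-2512",
    "knowledge_dense": "GLM-Image",
    "complex_editing": "HunyuanImage-3.0-Instruct",
}


def recommend(priority: str) -> str:
    """Return the suggested model for a given top priority."""
    try:
        return RECOMMENDATIONS[priority]
    except KeyError:
        options = ", ".join(sorted(RECOMMENDATIONS))
        raise ValueError(f"Unknown priority {priority!r}; choose one of: {options}") from None


print(recommend("commercial_legal_clarity"))
```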
